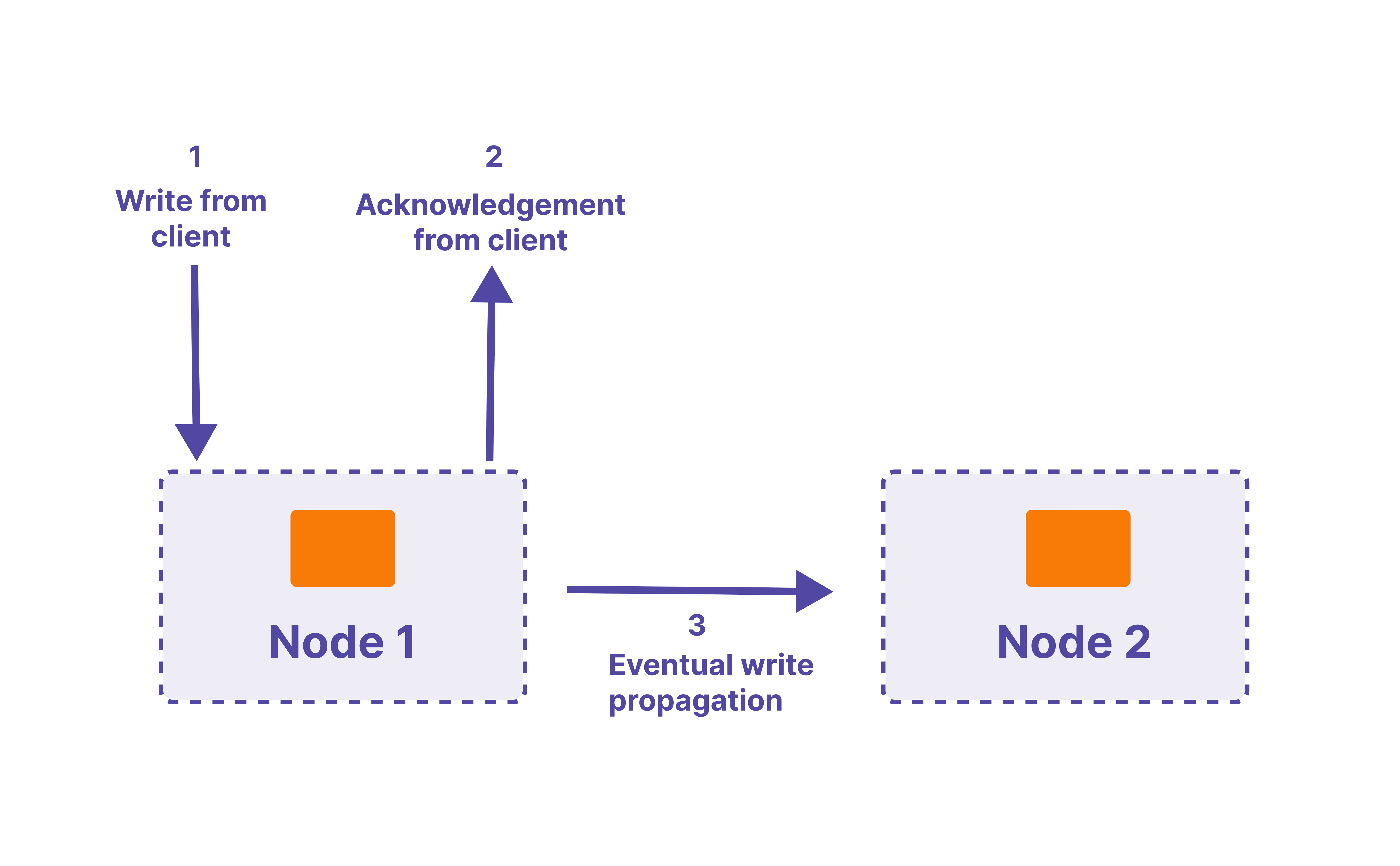

In modern data platforms, especially during cloud migrations or modernization projects, eventual consistency is a common trade-off made for scalability and availability. NoSQL databases such as Cassandra, DynamoDB, and MongoDB support this relaxed consistency model, in some cases by default: temporary inconsistencies between replicas are allowed, and the replicas eventually converge to the same state once writes stop arriving.

But this raises a pressing question during migration QA and modernization initiatives:

How do you assure data quality when reads can return outdated or inconsistent values?

This guide explains the critical data quality metrics for eventually consistent environments—and why automated, agentic validation is becoming essential.

Why Eventual Consistency Exists

Unlike traditional strong consistency—where every read returns the most recent write—eventual consistency favors system uptime, speed, and horizontal scale:

- Higher Availability: Systems stay operational even during network partitions.

- Faster Write Performance: Quick write acknowledgments without waiting for synchronization.

- Effortless Scalability: Easily handle massive data growth by adding nodes.

However, with these benefits come serious QA blind spots, especially during migration cutovers, legacy upgrades, or multi-system consolidations.
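To make the blind spot concrete, here is a toy, in-memory sketch of a stale read. The `EventuallyConsistentStore` class and its methods are invented for illustration and do not model any particular database: a write is acknowledged after reaching one replica, and the others only catch up when `propagate()` runs.

```python
class EventuallyConsistentStore:
    """Toy model: writes are acknowledged after one replica, others catch up later."""

    def __init__(self, replica_count=3):
        self.replicas = [{} for _ in range(replica_count)]
        self.pending = []  # queued (replica_index, key, value) updates

    def write(self, key, value):
        # Acknowledge as soon as the first replica has the value.
        self.replicas[0][key] = value
        for index in range(1, len(self.replicas)):
            self.pending.append((index, key, value))

    def read(self, key, replica_index):
        # A read served by a lagging replica may return an old or missing value.
        return self.replicas[replica_index].get(key)

    def propagate(self):
        # Apply all queued updates, simulating convergence.
        for index, key, value in self.pending:
            self.replicas[index][key] = value
        self.pending.clear()


store = EventuallyConsistentStore()
store.write("policy-1", "ACTIVE")
stale = store.read("policy-1", replica_index=2)   # not yet replicated
store.propagate()
fresh = store.read("policy-1", replica_index=2)   # replicas have converged
```

A QA check that happens to read from the lagging replica before `propagate()` would report a missing record even though the write succeeded.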

Rethinking Data Quality Metrics for NoSQL

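During migration QA, common data quality metrics like accuracy or completeness still apply, but they need to account for data staleness (how old a returned value may be), propagation lag (how long a write takes to become visible on every replica or in the target system), and schema flexibility (records in the same collection may carry different fields).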

Without accounting for these factors, business sign-offs during migration projects become risky and unreliable.
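One way to fold propagation lag into a completeness metric is to count only the records that have had a reasonable chance to arrive. The sketch below is a minimal illustration under assumed inputs: the `RecordObservation` type, its fields, and the `grace` window are hypothetical, and in practice the timestamps would come from whatever write and visibility tracking the migration tooling provides.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class RecordObservation:
    key: str
    source_written_at: datetime
    target_visible_at: Optional[datetime]  # None if not yet seen in the target


def propagation_lags(observations: List[RecordObservation]) -> List[timedelta]:
    """Lag between the source write and first visibility in the target."""
    return [
        o.target_visible_at - o.source_written_at
        for o in observations
        if o.target_visible_at is not None
    ]


def completeness(observations: List[RecordObservation],
                 as_of: datetime,
                 grace: timedelta) -> float:
    """Share of 'due' records visible in the target at `as_of`.

    A record is due only if it was written at least `grace` before `as_of`,
    so very recent writes are not counted as missing.
    """
    due = [o for o in observations if o.source_written_at <= as_of - grace]
    if not due:
        return 1.0
    visible = [
        o for o in due
        if o.target_visible_at is not None and o.target_visible_at <= as_of
    ]
    return len(visible) / len(due)
```

The `grace` window is a judgment call: too short and normal replication delay shows up as data loss, too long and real gaps stay hidden until late in the cutover.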

Why Manual QA Fails in Distributed Systems

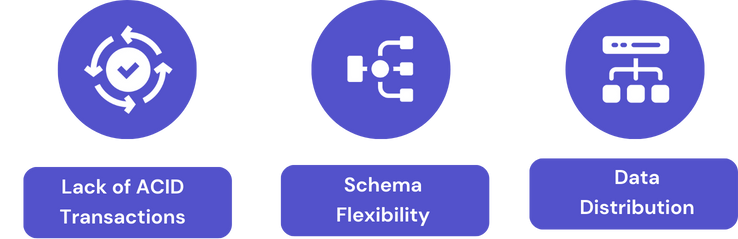

In NoSQL migrations, traditional SQL-based sampling or manual scripts fail on three fronts:

- Schema Flexibility: Absence of strict schemas makes structural QA harder.

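- Transaction Relaxation: Eventually consistent stores relax the isolation and consistency guarantees that ACID transactions provide, so anomalies such as stale or out-of-order reads can go unnoticed.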

- Scale Complexity: Validating billions of distributed records during migration manually is unsustainable.

The result?

Missed errors, business distrust, and go-live regressions—especially across phased cutovers or multi-region data flows.
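The schema-flexibility problem can at least be made visible with a structural profile of the documents. The following is a minimal sketch, assuming documents are available as plain Python dictionaries; the function names and the 0.5 threshold are illustrative choices, not part of any tool mentioned here.

```python
from collections import Counter
from typing import Dict, Iterable, List, Mapping


def field_presence(documents: Iterable[Mapping]) -> Dict[str, float]:
    """Fraction of documents in which each top-level field appears."""
    counts = Counter()
    total = 0
    for doc in documents:
        counts.update(doc.keys())
        total += 1
    if total == 0:
        return {}
    return {field: n / total for field, n in counts.items()}


def sparse_fields(documents: Iterable[Mapping], threshold: float = 0.5) -> List[str]:
    """Fields present in fewer than `threshold` of documents, sorted by name."""
    presence = field_presence(documents)
    return sorted(f for f, rate in presence.items() if rate < threshold)
```

Comparing the presence profile of the source collection with that of the target is a cheap first signal that a field was dropped or renamed during migration.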

How Intelligent Validation Fixes This

Agentic platforms like Datachecks aim to establish trust in data once it has converged, through:

- Post-Sync Assurance: Automated checks run after data propagation stabilizes—ensuring final-state integrity.

- Agent-Driven Rules: Declarative rules dynamically adapt to schema drift and flexible structures.

- Business-Logic Validation: Validate downstream critical calculations, e.g., premiums, balances, or claim status.

- Cutover Readiness Signals: Real-time validation pipelines offer clear readiness signals during go-live rehearsals.
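The post-sync idea in the list above can be sketched generically: poll until source and target agree or a timeout expires, then report whatever still differs. This is a hand-rolled illustration, not how Datachecks implements it; `read_source` and `read_target` are hypothetical callables that return a record (or `None`) for a key.

```python
import hashlib
import json
import time
from typing import Callable, Iterable, List, Mapping, Optional

Reader = Callable[[str], Optional[Mapping]]


def fingerprint(record: Optional[Mapping]) -> str:
    """Stable hash of a record; key order does not affect the result."""
    payload = json.dumps(record, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def mismatched_keys(read_source: Reader, read_target: Reader,
                    keys: Iterable[str]) -> List[str]:
    """Keys whose source and target records currently differ."""
    return [
        k for k in keys
        if fingerprint(read_source(k)) != fingerprint(read_target(k))
    ]


def validate_after_convergence(read_source: Reader, read_target: Reader,
                               keys: Iterable[str],
                               timeout_s: float = 30.0,
                               interval_s: float = 1.0,
                               sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Re-check differing keys until they match or the timeout expires.

    Returns the keys that still differ; an empty list means the final
    state matched for every key.
    """
    deadline = time.monotonic() + timeout_s
    pending = mismatched_keys(read_source, read_target, list(keys))
    while pending and time.monotonic() < deadline:
        sleep(interval_s)
        pending = mismatched_keys(read_source, read_target, pending)
    return pending
```

Keys that remain after the timeout are the ones worth investigating: they are either genuinely wrong or lagging beyond what the team has agreed to tolerate.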

Example: Migration Validation in Action

- No custom scripts

- Agentically interpretable

- Scales across thousands of collections
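As a rough illustration of the declarative style, rules can be expressed as data and evaluated uniformly over source and target record pairs. Everything in this sketch is hypothetical: the `Rule` class, the rule names, and the `balance` and `claim_status` fields are invented for illustration and do not reflect Datachecks' actual rule syntax.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Mapping, Sequence, Tuple


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[Mapping, Mapping], bool]  # (source, target) -> passed?


def balances_match(source: Mapping, target: Mapping) -> bool:
    # Tolerate sub-cent rounding differences; a missing balance is a failure.
    target_balance = target.get("balance")
    return target_balance is not None and abs(source["balance"] - target_balance) < 0.005


def claim_status_preserved(source: Mapping, target: Mapping) -> bool:
    return source["claim_status"] == target.get("claim_status")


RULES = [
    Rule("balance_matches", balances_match),
    Rule("claim_status_preserved", claim_status_preserved),
]


def evaluate(rules: Sequence[Rule],
             pairs: Mapping[str, Tuple[Mapping, Mapping]]) -> Dict[str, List[str]]:
    """Return, for each rule, the keys of record pairs that failed it."""
    failures: Dict[str, List[str]] = {rule.name: [] for rule in rules}
    for key, (source, target) in pairs.items():
        for rule in rules:
            if not rule.check(source, target):
                failures[rule.name].append(key)
    return failures
```

Keeping the rules separate from the evaluation loop means new business checks can be added without touching the code that walks the data.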

From Legacy to Modernized Confidence

Whether you’re:

- Migrating NoSQL-to-NoSQL (Cassandra → DynamoDB),

- Moving RDBMS → NoSQL (Oracle → MongoDB), or

- Consolidating across multi-cloud environments,

agentic validation can shorten QA cycles and support cleaner cutovers and data pipelines the business trusts.

Key Takeaways

- Eventual consistency requires post-convergence quality validation.

- Manual scripts or SQL queries break down at NoSQL scale.

- Agentic QA platforms enable real-time, schema-flexible, business-aligned testing.

- Build confidence in migrations and modernization projects—without over-relying on manual effort.
